In the world of AI-driven development, code generation methods have progressed rapidly, allowing developers to automate significant portions of their workflow. One critical concern in this domain is ensuring the reliability and functionality of AI-generated code, particularly with regard to its visual output. This is where automated visual testing plays an important role. By integrating visual testing into the development pipeline, organizations can improve the reliability of their AI code generation systems, ensuring consistency in the appearance and behavior of user interfaces (UIs), graphical elements, and other visually driven components.

In this article, we explore the techniques, tools, and best practices for automating visual testing in AI code generation, highlighting how this approach assures quality and increases efficiency.

Why Visual Testing for AI Code Generation Is Vital

With the growing complexity of modern applications, AI code generation models are often tasked with creating UIs, graphical elements, and even design layouts. The generated code needs to align with expected visual outcomes, whether it is intended for web interfaces, mobile apps, or software dashboards. Traditional testing methods may confirm functional accuracy, but they often fall short when it comes to validating visual consistency and user experience (UX).

Automated visual testing ensures that:

UIs behave and appear as intended: Generated code must produce UIs that match the intended designs in terms of layout, color schemes, typography, and interactions.

Cross-browser compatibility: The visual output must remain consistent across different browsers and devices.

Visual regressions are caught early: As updates are made to the AI models or the design system, visual differences can be detected before they impact the end user.

Key Techniques for Automating Visual Testing in AI Code Generation

Snapshot Testing

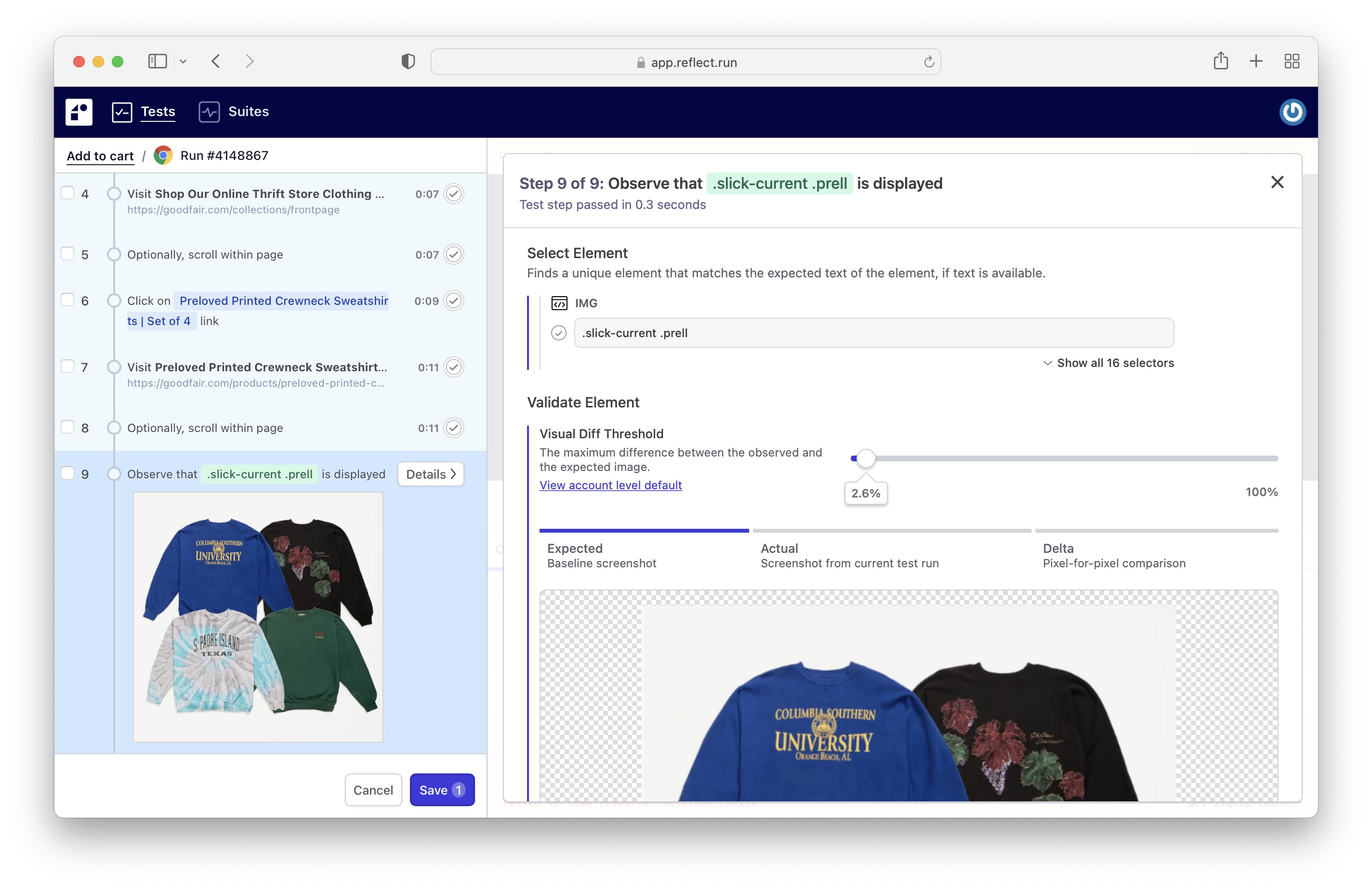

Snapshot testing is among the most commonly used techniques in visual testing. It involves capturing visual snapshots of UI elements or entire pages and comparing them against a baseline (the expected output). When AI-generated code changes, new snapshots are compared to the baseline. If there are significant differences, the tests flag them for review.

For AI code generation, snapshot testing ensures:

Any UI changes introduced by new AI-generated code are intentional and expected.

Visual regressions (such as broken layouts, incorrect colors, or misplaced elements) are detected automatically.

Tools like Jest, Storybook, and Chromatic are commonly used in this process, helping integrate snapshot testing directly into development pipelines.
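The baseline-and-compare cycle described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not how Jest or Chromatic work internally: it snapshots serialized markup as text rather than rendered images, and the function name `check_snapshot`, the `.snap` file extension, and the sample markup are assumptions made for the example.

```python
import tempfile
from pathlib import Path


def check_snapshot(name, rendered, snapshot_dir):
    """Compare rendered output with its stored baseline.

    Returns "created" when no baseline existed yet, "match" when the output
    equals the baseline, and "mismatch" when it differs.
    """
    baseline = snapshot_dir / f"{name}.snap"
    if not baseline.exists():
        # First run: the current output becomes the baseline.
        baseline.write_text(rendered, encoding="utf-8")
        return "created"
    stored = baseline.read_text(encoding="utf-8")
    return "match" if stored == rendered else "mismatch"


# Hypothetical markup standing in for AI-generated UI code.
snapshot_dir = Path(tempfile.mkdtemp())
original = '<button class="primary">Log in</button>'
regenerated = '<button class="secondary">Log in</button>'

first = check_snapshot("login_button", original, snapshot_dir)      # "created"
second = check_snapshot("login_button", original, snapshot_dir)     # "match"
third = check_snapshot("login_button", regenerated, snapshot_dir)   # "mismatch"
```

A "mismatch" result is a prompt for review rather than an automatic failure verdict: if the change was intended, the baseline file is replaced with the new output.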

DOM Element and Style Testing

In addition to examining how elements render visually, automated tests can inspect the Document Object Model (DOM) and ensure that AI-generated code adheres to the expected structure and style rules. By checking the DOM tree, developers can validate the presence of specific elements, CSS classes, and styling attributes.

For example, automated DOM testing ensures that:

Generated code includes the necessary UI components (e.g., buttons, input fields) and places them in the correct hierarchy.

CSS styling rules generated by AI match the expected visual outcome.

This technique complements visual testing by ensuring that both the underlying structure and the visual appearance are accurate.
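As a rough sketch of such a structural check, the example below parses generated markup with Python's standard `html.parser` module and verifies that a required element exists, carries an expected CSS class, and sits inside an expected ancestor. The helper names `ElementCollector` and `has_element`, and the sample form markup, are hypothetical; a real project would more likely run these assertions against a live DOM in a browser.

```python
from html.parser import HTMLParser


class ElementCollector(HTMLParser):
    """Record (tag, classes, ancestors) for every element start tag."""

    # Elements that never have a closing tag, so they are not pushed on the stack.
    VOID_TAGS = {"input", "img", "br", "hr", "meta", "link"}

    def __init__(self):
        super().__init__()
        self.stack = []      # currently open tags, outermost first
        self.elements = []   # (tag, set of classes, tuple of ancestors)

    def handle_starttag(self, tag, attrs):
        classes = set((dict(attrs).get("class") or "").split())
        self.elements.append((tag, classes, tuple(self.stack)))
        if tag not in self.VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in self.stack:
            # Pop back to (and including) the matching open tag.
            while self.stack.pop() != tag:
                pass


def has_element(html, tag, required_class=None, inside=None):
    """True if some <tag> has the class (if given) and the ancestor (if given)."""
    collector = ElementCollector()
    collector.feed(html)
    for found_tag, classes, ancestors in collector.elements:
        if found_tag != tag:
            continue
        if required_class is not None and required_class not in classes:
            continue
        if inside is not None and inside not in ancestors:
            continue
        return True
    return False


# Hypothetical AI-generated markup.
generated = """
<form class="login-form">
  <input type="email" class="field">
  <button class="btn btn-primary">Log in</button>
</form>
"""

button_ok = has_element(generated, "button", "btn-primary", inside="form")
input_ok = has_element(generated, "input", inside="form")
```

Checks like these are cheap to run and fail with a precise reason (a missing class or a misplaced element), which makes them a useful companion to image-based comparisons.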

Cross-Browser Testing and Device Emulation

AI code generation must produce UIs that perform consistently across a range of browsers and devices. Automated cross-browser testing tools like Selenium, BrowserStack, and LambdaTest allow developers to run their visual tests across different browser environments and screen sizes.

Device emulation can also be used to simulate how the AI-generated UIs appear on different devices, such as smartphones and tablets. This ensures:

Mobile responsiveness: Generated code adapts properly to various screen sizes and orientations.

Cross-browser consistency: The visual output remains stable across Chrome, Firefox, Safari, and other browsers.
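One way to keep browser and device coverage explicit is to generate the test matrix from data. The sketch below only builds the list of configurations; handing each entry to a browser automation tool is left out. The viewport sizes, the `build_matrix` name, and the dictionary keys are illustrative assumptions, not values prescribed by any of the tools mentioned above.

```python
from itertools import product

BROWSERS = ["chrome", "firefox", "safari"]

# Portrait viewport sizes in CSS pixels (illustrative values only).
DEVICES = {
    "phone": (375, 667),
    "tablet": (768, 1024),
}

ORIENTATIONS = ["portrait", "landscape"]


def build_matrix(browsers, devices, orientations):
    """Return one run configuration per browser/device/orientation combination."""
    runs = []
    for browser, (device, (width, height)), orientation in product(
        browsers, devices.items(), orientations
    ):
        if orientation == "landscape":
            # Landscape swaps the portrait dimensions.
            width, height = height, width
        runs.append(
            {
                "browser": browser,
                "device": device,
                "orientation": orientation,
                "width": width,
                "height": height,
            }
        )
    return runs


matrix = build_matrix(BROWSERS, DEVICES, ORIENTATIONS)
```

With three browsers, two devices, and two orientations, this yields twelve configurations, each of which would get its own baseline screenshot.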

Pixel-by-Pixel Comparison

Pixel-by-pixel comparison tools can detect the smallest visual discrepancies between expected and actual output. By comparing screenshots of AI-generated UIs at the pixel level, automated tests can ensure visual precision in terms of spacing, alignment, and color rendering.

Tools like Applitools, Percy, and Cypress offer advanced visual regression testing features, allowing testers to fine-tune their comparison algorithms to account for minor, acceptable variations while flagging significant defects.

This method is especially useful for detecting:

Unintended visual changes that may not be immediately obvious to the human eye.

Slight UI regressions caused by subtle changes in layout, font rendering, or image positioning.
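The core of a strict pixel-level comparison is small enough to show directly. In this sketch, screenshots are represented as nested lists of RGB tuples so the example stays dependency-free; in practice the pixel data would come from an image library or a testing tool. The function name `diff_pixels` and the tiny sample images are assumptions for illustration.

```python
def diff_pixels(baseline, actual):
    """Return the (x, y) coordinates of every pixel that differs.

    Both images are lists of rows, each row a list of (r, g, b) tuples.
    """
    same_height = len(baseline) == len(actual)
    same_widths = all(len(b) == len(a) for b, a in zip(baseline, actual))
    if not (same_height and same_widths):
        raise ValueError("images must have identical dimensions")

    changed = []
    for y, (row_b, row_a) in enumerate(zip(baseline, actual)):
        for x, (pixel_b, pixel_a) in enumerate(zip(row_b, row_a)):
            if pixel_b != pixel_a:
                changed.append((x, y))
    return changed


WHITE = (255, 255, 255)

# A 3x2 all-white baseline and a copy where one pixel is off by a single
# red level, a difference a human reviewer would be unlikely to notice.
baseline = [[WHITE, WHITE, WHITE], [WHITE, WHITE, WHITE]]
actual = [[WHITE, (254, 255, 255), WHITE], [WHITE, WHITE, WHITE]]

changed = diff_pixels(baseline, actual)  # [(1, 0)]
```

A strict comparison like this reports every deviation, however small, which is why it is usually paired with a tolerance setting.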

AI-Assisted Visual Testing

Integrating AI itself into the visual testing process is a growing trend. AI-powered visual testing tools like Applitools Eyes and Testim use machine learning algorithms to intelligently identify and prioritize visual changes. These tools can distinguish between acceptable variations (such as different font rendering across platforms) and true regressions that affect user experience.

AI-assisted visual testing tools offer advantages such as:

Smarter analysis of visual changes, reducing false positives and making it easier for developers to focus on critical issues.

Dynamic baselines that adapt to minor updates in the design system, preventing unnecessary test failures due to non-breaking changes.

Best Practices for Automating Visual Testing in AI Code Generation

Integrate Visual Testing Early in the CI/CD Pipeline

To prevent regressions from reaching production, it’s crucial to integrate automated visual testing into your continuous integration/continuous delivery (CI/CD) pipeline. By running visual tests as part of the development process, AI-generated code is validated before it’s deployed, which helps keep release quality high.

Set Tolerances for Acceptable Visual Differences

Not all visual changes are bad. Some changes, such as small font rendering differences across browsers, are acceptable. Visual testing tools often allow developers to set tolerances for acceptable differences, ensuring tests don’t fail for insignificant variations.

By fine-tuning these tolerances, teams can reduce the number of false positives and focus on significant regressions that affect the overall UX.
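A tolerance typically has two parts: how far a single pixel may drift before it counts as different, and what share of differing pixels is still acceptable. The sketch below illustrates both with plain Python lists of RGB tuples. The names `diff_ratio` and `passes`, and the default values of 8 color levels and 1% of pixels, are assumptions chosen for the example, not defaults from any particular tool.

```python
def diff_ratio(baseline, actual, channel_tolerance=0):
    """Fraction of pixels whose color differs by more than the tolerance.

    Images are equal-sized lists of rows of (r, g, b) tuples. A pixel counts
    as different if any channel deviates by more than channel_tolerance.
    """
    total = 0
    differing = 0
    for row_b, row_a in zip(baseline, actual):
        for pixel_b, pixel_a in zip(row_b, row_a):
            total += 1
            if any(abs(cb - ca) > channel_tolerance
                   for cb, ca in zip(pixel_b, pixel_a)):
                differing += 1
    return differing / total if total else 0.0


def passes(baseline, actual, channel_tolerance=8, max_diff_ratio=0.01):
    """True if the share of differing pixels stays within the allowed ratio."""
    return diff_ratio(baseline, actual, channel_tolerance) <= max_diff_ratio


GRAY = (128, 128, 128)
baseline = [[GRAY] * 10 for _ in range(10)]

# Minor rendering drift: the top row is 3 levels brighter.
drifted = [list(row) for row in baseline]
drifted[0] = [(131, 131, 131)] * 10

# A real regression: a 3x3 block in the corner has turned red.
broken = [list(row) for row in baseline]
for y in range(3):
    for x in range(3):
        broken[y][x] = (255, 0, 0)

drift_passes = passes(baseline, drifted)   # True: drift is within tolerance
broken_passes = passes(baseline, broken)   # False: 9% of pixels changed
```

With a channel tolerance of zero, the drifted image would show 10% of its pixels as changed and fail; with a tolerance of 8 it passes, while the red block is still caught.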

Test Across Multiple Environments

As mentioned earlier, AI code generation has to produce consistent UIs across different browsers and devices. Make sure to test AI-generated code in a variety of environments to catch compatibility issues early.

Use Component-Level Testing

Instead of testing entire pages or screens at once, consider testing individual UI components. This approach makes it easier to isolate and fix issues when visual regressions occur. It’s especially effective for AI-generated code, which often produces modular, reusable components for modern web frameworks such as React, Vue, or Angular.

Monitor and Review AI Model Updates

AI models are constantly evolving. As new versions of code generation models are deployed, their output may change in subtle ways. Regularly review the visual impact of these updates, and use automated testing tools to track how generated UIs evolve over time.

Conclusion

Automating visual testing for AI code generation is a crucial step in ensuring the quality, consistency, and user-friendliness of AI-generated UIs. By leveraging techniques like snapshot testing, pixel-by-pixel comparison, and AI-assisted visual testing, developers can effectively detect and prevent visual regressions. When integrated into the CI/CD pipeline and optimized using best practices, automated visual testing enhances the reliability and performance of AI-driven development processes.

Ultimately, the goal is to ensure that AI-generated code not only functions correctly but also looks and feels right across different platforms and devices, delivering the best possible user experience every time.