AI-driven code generation has attracted significant attention recently, changing the way software is developed. These systems, built on large language models (LLMs) such as OpenAI’s Codex and Google’s PaLM, aim to generate code automatically from natural language instructions, streamlining development and reducing the burden of manual coding. While AI code generators have shown promise in producing efficient, well-structured code, they come with their own set of challenges. One of the most significant is the difficulty of achieving test observability.

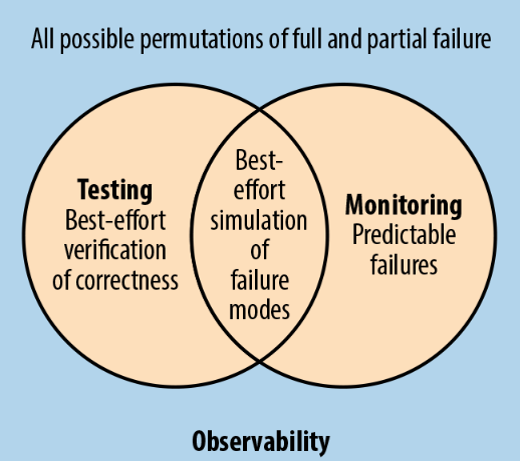

Test observability refers to the ability to monitor and understand the behavior of a system during testing. In the context of AI code generators, achieving observability means being able to detect and diagnose issues in the generated code, understand why a given piece of code was produced, and ensure that the code meets the required functionality and quality standards. This article explores the key challenges involved in achieving test observability for AI-generated code, why these challenges arise, and how they could be addressed in the future.

1. The Black Box Nature of AI Models

Challenge: Lack of Transparency

AI models, particularly large language models, operate largely as “black boxes.” These models are trained on vast datasets and use complex neural networks to make predictions or generate outputs, but their internal workings are not easily interpretable by humans. This lack of transparency is a key obstacle when trying to understand why a specific piece of code was generated. The model’s decision-making process, shaped by millions or billions of parameters, is not readily visible, which makes it hard to trace the reasoning behind the generated code.

Impact on Test Observability:

Debugging and identifying the root cause of faulty or incorrect code becomes very difficult.

It is hard to identify specific patterns in code generation errors, which limits developers’ ability to improve model performance.

Anomalies in the generated code may go undetected because there is little visibility into the AI model’s internal reasoning.

Potential Solution: Explainability Techniques

Developing AI explainability techniques tailored to code generation could help bridge this gap. For example, researchers are exploring methods such as attention visualization and saliency maps, which highlight the parts of the input that most influenced the model’s output. However, applying these techniques effectively to code generation tasks is still an open problem.
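To make the idea of input attribution concrete, here is a minimal sketch of occlusion-based attribution, a simple perturbation method in the same family as saliency maps. It is not how any particular model exposes its reasoning: `toy_score` is a hypothetical stand-in for a model's confidence in its output, and the weights inside it are invented for illustration.

```python
def occlusion_attribution(prompt_tokens, score_fn):
    """Score drop when each token is removed; a larger drop suggests more influence."""
    baseline = score_fn(prompt_tokens)
    attributions = []
    for i, token in enumerate(prompt_tokens):
        reduced = prompt_tokens[:i] + prompt_tokens[i + 1:]
        attributions.append((token, baseline - score_fn(reduced)))
    return attributions


def toy_score(tokens):
    """Hypothetical stand-in for a model's confidence in emitting a sort routine."""
    weights = {"sort": 0.6, "list": 0.3, "descending": 0.1}  # invented values
    return sum(weights.get(t, 0.0) for t in tokens)


prompt = ["please", "sort", "the", "list", "descending"]
ranked = sorted(
    occlusion_attribution(prompt, toy_score),
    key=lambda pair: pair[1],
    reverse=True,
)
for token, drop in ranked:
    print(f"{token:12s} {drop:.2f}")
```

With a real model, `score_fn` would have to be replaced by something like the log-probability of the generated code given the prompt, which requires one model call per removed token.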

2. Inconsistent Behavior Across Similar Inputs

Challenge: Variability in Code Generation

Another challenge with AI code generators is that they can produce different code for the same or similar input prompts, which makes the system’s behavior hard to predict. Even small changes to the prompt can lead to significant differences in the generated code. This variability introduces uncertainty and complicates testing, because the same test case may yield different results on different runs.

Impact on Test Observability:

Inconsistent behavior makes it harder to establish reproducible test cases.

Detecting subtle bugs becomes more complex when the generated code varies unpredictably.

Understanding why one version of the code passes tests while another fails is difficult, because the underlying model may behave differently for reasons that are not immediately clear.
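One way to make this variability observable is to measure it. The sketch below assumes the generator emits Python and that several outputs for the same prompt have already been collected; the three `samples` are hand-written placeholders, not real model output. It compares programs by their syntax tree, so differences in comments or formatting do not count as variation.

```python
import ast
from collections import Counter


def structural_fingerprint(source):
    """Dump the AST so formatting and comment differences do not count as variation."""
    return ast.dump(ast.parse(source))


def variability(samples):
    """Count how often each structurally distinct program appears among the samples."""
    return Counter(structural_fingerprint(s) for s in samples)


# Placeholder outputs for one prompt; a real run would collect these from the generator.
samples = [
    "def add(a, b):\n    return a + b\n",
    "def add(a, b):\n    return a + b  # sum\n",
    "def add(a, b):\n    return b + a\n",
]
counts = variability(samples)
print(f"{len(counts)} distinct programs across {len(samples)} samples")
```

Tracking the number of distinct fingerprints per prompt over time gives a rough signal of how reproducible a test case built on that prompt is likely to be.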

Potential Solution: Deterministic Code Generation

Efforts to make AI code generators more deterministic could help mitigate this issue. Techniques such as temperature control (which governs the randomness of the model’s output) or beam search (which explores multiple candidate outputs before choosing the highest-scoring one) can reduce variability. However, achieving full determinism without sacrificing creativity or flexibility remains difficult.
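The effect of temperature can be illustrated with a small, self-contained sketch of next-token selection. The `logits` values are hypothetical scores for three candidate tokens, and treating temperature 0 as a plain greedy choice is a common convention assumed here rather than a description of any specific model's API.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_token(logits, temperature, rng):
    """Greedy (deterministic) choice at temperature 0, otherwise a weighted random draw."""
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [2.0, 1.5, 0.3]  # hypothetical scores for three candidate next tokens
rng = random.Random(42)   # fixed seed so the sampled run is repeatable
greedy = [pick_token(logits, 0, rng) for _ in range(5)]
sampled = [pick_token(logits, 1.0, rng) for _ in range(5)]
print("greedy:", greedy)
print("sampled:", sampled)
```

The greedy run always selects the top-scoring token, while the sampled run can pick lower-scoring tokens. That spread is the source of run-to-run differences, and also of the variety that strict determinism gives up.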

3. Limited Context Awareness

Challenge: Difficulty in Handling Large Contexts

AI code generators rely heavily on the input prompt, but their ability to handle complex, long-range dependencies within code is limited. For example, when generating a large function or module, the model may lose track of important context from earlier parts of the code. The result can be code that is syntactically correct but fails to integrate properly with the rest of the project, introducing hidden bugs that are hard to detect.

Impact on Test Observability:

Incomplete understanding of context can lead to the generation of incomplete or incompatible code, which is difficult to catch in testing.

Misunderstandings of the codebase’s overall structure can introduce subtle integration bugs that go undetected during initial testing.

Potential Solution: Enhanced Memory Mechanisms

Improving the memory capacity of AI models, for example through recurrent neural networks (RNNs) or transformer-based architectures with extended context windows, may help maintain better context awareness over longer stretches of code. However, even state-of-the-art models like GPT-4 struggle to retain a truly comprehensive picture of a large project.

4. Lack of Intent Understanding

Challenge: Misalignment of Code with User Intent

While AI code generators excel at following surface-level instructions, they often fail to grasp the deeper intent behind a user’s request. This misalignment between user intent and the generated code is a significant challenge for test observability, because tests are designed to validate the intended functionality of the code. When the intent is misunderstood, the generated code may pass syntax checks and unit tests but still fail to deliver the correct functionality.

Impact on Test Observability:

Tests may pass even when the code does not fulfill the intended goal, leading to false positives.

Debugging intent misalignments is difficult without clear insight into the AI’s reasoning process.

Developers may need to write additional tests to ensure that user intent, and not just syntax, is satisfied, which adds complexity to the testing process.

Potential Solution: Refining Prompt Engineering

Improving prompt engineering techniques to communicate the user’s intent more clearly could help align the generated code with what the developer actually wants. In addition, feedback loops in which developers provide corrections or clarifications to the model can help refine its output.
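A feedback loop of this kind can be sketched in a few lines. Everything here is illustrative: `fake_generate` is a hypothetical stub standing in for a real model call, the checks encode intent for a toy `mean` function, and a real setup would run generated code in a sandbox instead of calling `exec` directly.

```python
def run_checks(source, checks):
    """Execute generated source and return the names of the intent checks it fails."""
    namespace = {}
    try:
        exec(source, namespace)  # assumption: trusted toy input; sandbox real output
    except Exception as exc:
        return [f"code did not load: {exc!r}"]
    failures = []
    for name, check in checks.items():
        try:
            ok = check(namespace)
        except Exception:
            ok = False  # a crashing check counts as a failure
        if not ok:
            failures.append(name)
    return failures


def refine(prompt, generate, checks, max_rounds=3):
    """Regenerate with failure feedback appended to the prompt until the checks pass."""
    history = []
    for _ in range(max_rounds):
        source = generate(prompt)
        failures = run_checks(source, checks)
        history.append((prompt, failures))
        if not failures:
            return source, history
        prompt = f"{prompt}\nThe previous attempt failed: {', '.join(failures)}"
    return None, history


def fake_generate(prompt):
    """Hypothetical model stub: handles the empty list only after receiving feedback."""
    if "failed" in prompt:
        return "def mean(xs):\n    return sum(xs) / len(xs) if xs else 0.0\n"
    return "def mean(xs):\n    return sum(xs) / len(xs)\n"


checks = {
    "handles typical input": lambda ns: ns["mean"]([1, 2, 3]) == 2,
    "empty list returns 0.0": lambda ns: ns["mean"]([]) == 0.0,
}
source, history = refine("Write mean(xs).", fake_generate, checks)
print(len(history), "rounds; first-round failures:", history[0][1])
```

Keeping the `history` of prompts and failures is what adds observability: it records which stated intent each attempt missed, not just the final pass or fail.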

5. Difficulty in Evaluating Code Quality

Challenge: Lack of Standardized Metrics for AI-Generated Code

Evaluating the quality of AI-generated code is another major challenge for test observability. Traditional measures of code quality, such as readability, maintainability, and performance, are difficult to assess automatically. While syntax and logic errors can be caught through conventional tests, deeper issues such as code elegance, adherence to best practices, or performance optimization are harder to measure.

Impact on Test Observability:

Important aspects of code quality may be overlooked, allowing poorly structured or inefficient code to pass tests.

Developers may need to rely heavily on manual review or additional layers of testing, which reduces the efficiency gains of using an AI code generator in the first place.

Potential Solution: Code Quality Analyzers and AI Feedback Loops

Integrating AI-driven code quality analyzers that focus on best practices, performance optimizations, and readability can complement traditional tests. However, building analyzers that provide consistent, meaningful feedback on AI-generated code remains a technical challenge.
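As a starting point well short of an AI-driven analyzer, some structural signals can be extracted with Python's standard `ast` module. The sketch below assumes the generated code is Python and reports three rough proxies per function (length, nesting depth, and whether a docstring exists). The choice of metrics is an assumption for illustration, not an established standard.

```python
import ast

NESTING_NODES = (ast.If, ast.For, ast.While, ast.With, ast.Try)


def nesting_depth(node, current=0):
    """Deepest level of nested control-flow statements beneath the given node."""
    deepest = current
    for child in ast.iter_child_nodes(node):
        step = 1 if isinstance(child, NESTING_NODES) else 0
        deepest = max(deepest, nesting_depth(child, current + step))
    return deepest


def function_metrics(source):
    """Return line count, nesting depth, and docstring presence for each function."""
    report = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            report[node.name] = {
                "lines": node.end_lineno - node.lineno + 1,
                "max_nesting": nesting_depth(node),
                "has_docstring": ast.get_docstring(node) is not None,
            }
    return report


# Hand-written sample standing in for generated code.
sample = '''
def find(items, target):
    for i, item in enumerate(items):
        if item == target:
            return i
    return -1
'''
report = function_metrics(sample)
print(report)
```

Metrics like these can flag code that passes its tests yet is long, deeply nested, or undocumented, which gives reviewers a place to look first.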

6. Scalability Issues

Challenge: Performance and Complexity in Large Codebases

AI code generators may perform well when generating small functions or classes, but scaling up to larger projects poses additional challenges. As projects grow in size and complexity, AI-generated code becomes harder to test exhaustively. Test coverage may decrease, leading to gaps in observability and an increased risk of undetected problems.

Impact on Test Observability:

Large projects make it harder to isolate issues in specific parts of the code.

Limited test coverage increases the risk of bugs going undetected.

Testing becomes more resource-intensive as codebases scale, reducing the speed at which AI-generated code can be validated.

Potential Solution: Modular Code Generation

One way to address scalability issues is to design AI models that generate code in a more modular fashion. By focusing on producing small, self-contained units of code that are easier to test individually, AI models could improve test observability even in larger projects. This modularity would also allow more focused testing and debugging.
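The benefit of small units for fault isolation can be shown with a minimal sketch. The two units below are hand-written placeholders for generated code (one contains a deliberate bug), and `validate_units` is a hypothetical helper, not part of any existing tool. Real generated code should be executed in a sandbox.

```python
def validate_units(units):
    """Load and check each generated unit in its own namespace; report status per unit."""
    report = {}
    for name, (source, check) in units.items():
        namespace = {}  # fresh namespace so units cannot affect one another
        try:
            exec(source, namespace)  # assumption: trusted toy input
            report[name] = "pass" if check(namespace) else "fail"
        except Exception as exc:
            report[name] = f"error: {type(exc).__name__}"
    return report


units = {
    "slugify": (
        "def slugify(s):\n    return s.strip().lower().replace(' ', '-')\n",
        lambda ns: ns["slugify"](" Hello World ") == "hello-world",
    ),
    "clamp": (
        # Deliberate bug for illustration: the upper bound `hi` is never used.
        "def clamp(x, lo, hi):\n    return max(lo, min(x, lo))\n",
        lambda ns: ns["clamp"](5, 0, 10) == 5,
    ),
}
report = validate_units(units)
print(report)
```

Because each unit is loaded and checked separately, the report points directly at the failing unit, with no need to search through one large generated module.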

Conclusion

Achieving test observability in AI code generators is a complex challenge that stems from the inherent nature of AI systems. The black-box nature of AI models, variability in output, limited context awareness, and the difficulty of understanding user intent all contribute to the struggle of monitoring and understanding the generated code’s behavior during testing. While solutions such as improved explainability techniques, deterministic generation methods, and better prompt engineering offer hope, the field is still in its early stages.

To fully realize the potential of AI code generators, developers must continue to innovate in ways that improve observability, allowing for more reliable, efficient, and transparent code generation. Only then can AI truly become a transformative force in software development.